If the individuals at the very forefront of artificial intelligence technology are warning of the potentially catastrophic effects of highly intelligent AI systems, then it’s probably wise to sit up and take notice.

Just a couple of months ago, Geoffrey Hinton, widely considered one of the “godfathers” of AI for his pioneering work in the field, said that the technology’s rapid pace of development meant it was “not inconceivable” that superintelligent AI, meaning AI superior to the human mind, could end up wiping out humanity.

And Sam Altman, CEO of OpenAI, the company behind the viral ChatGPT chatbot, has admitted to being “a little bit scared” about the potential effects of advanced AI systems on society.

Altman is so concerned that on Wednesday his company announced it’s setting up a new unit called Superalignment aimed at ensuring that superintelligent AI doesn’t end up causing chaos or something far worse.

“Superintelligence will be the most impactful technology humanity has ever invented, and could help us solve many of the world’s most important problems,” OpenAI said in a post introducing the new initiative. “But the vast power of superintelligence could also be very dangerous, and could lead to the disempowerment of humanity or even human extinction.”

OpenAI said that although superintelligent AI may seem a long way off, it believes it could be developed by 2030. And it readily admits that no system currently exists “for steering or controlling a potentially superintelligent AI, and preventing it from going rogue.”

To deal with the situation, OpenAI wants to build a “roughly human-level automated alignment researcher” that would perform safety checks on a superintelligent AI. The company added that managing these risks will also require new institutions for governance and solving the problem of superintelligence alignment.

For Superalignment to have an effect, OpenAI needs to assemble a crack team of top machine learning researchers and engineers.

The company appears very frank about its effort, describing it as an “incredibly ambitious goal” while also admitting that it’s “not guaranteed to succeed.” But it adds that it’s “optimistic that a focused, concerted effort can solve this problem.”

New AI tools like OpenAI’s ChatGPT and Google’s Bard, among many others, are so revolutionary that experts are certain that even at this pre-superintelligence level, the workplace and wider society face fundamental changes in the near term.

It’s why governments around the world are scrambling to play catch-up, hurriedly moving to impose regulations on the rapidly developing AI industry in a bid to ensure the technology is deployed in a safe and responsible manner. However, unless a single body is formed, each country will have its own views on how best to use the technology, meaning those regulations could vary widely and lead to markedly different outcomes. And it’s these different approaches that will make Superalignment’s goal all the harder to achieve.

Not so many moons ago, Trevor moved from one tea-loving island nation that drives on the left (Britain) to another (Japan)…

ChatGPT creator seeking to eliminate chatbot ‘hallucinations’

Despite all of the excitement around ChatGPT and similar AI-powered chatbots, the text-based tools still have some serious issues that need to be resolved.

Among them is their tendency to make up stuff and present it as fact when it doesn’t know the answer to an inquiry, a phenomenon that’s come to be known as “hallucinating.” As you can imagine, presenting falsehoods as fact to someone using one of the new wave of powerful chatbots could have serious consequences.

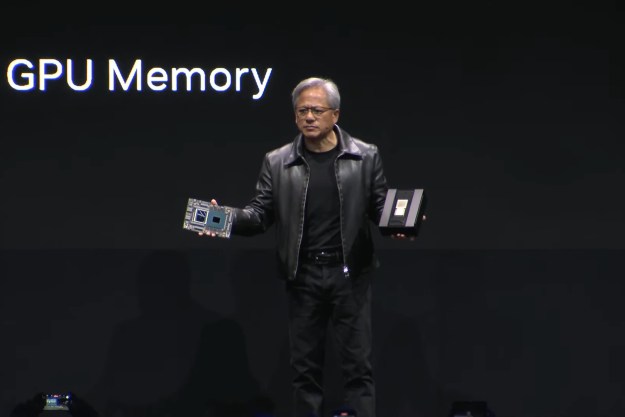

Nvidia’s supercomputer may bring on a new era of ChatGPT

Nvidia has just announced a new supercomputer that may change the future of AI. The DGX GH200, equipped with nearly 500 times more memory than the systems we’re familiar with now, will soon fall into the hands of Google, Meta, and Microsoft.

The goal? Revolutionizing generative AI, recommender systems, and data processing on a scale we’ve never seen before. Are language models like GPT going to benefit, and what will that mean for regular users?

This new Photoshop tool could bring AI magic to your images

These days, it seems like everyone and their dog is working artificial intelligence (AI) into their tech products, from ChatGPT in your web browser to click-and-drag image editing. The latest example is Adobe Photoshop, but this isn’t just another cookie-cutter quick fix — no, it could have a profound effect on imagery and image creators.

Photoshop’s newest feature is called Generative Fill, and it lets you use text prompts to automatically adjust areas of an image you are working on. This might let you add new features, adjust existing elements, or remove unwanted sections of the picture by typing your request into the app.